A recent study published in iScience reveals that participants were more likely to behave dishonestly toward AI partners than human ones. This tendency was particularly strong when the artificial agents were presented with feminine, nonbinary, or gender-neutral traits. In contrast, “male” agents were exploited less often.

Researchers used the classic Prisoner’s Dilemma game to observe cooperation and betrayal. Each partner was described as either a person or an AI system and labeled as “female,” “male,” “nonbinary,” or “genderless.” Participants had only these labels to base their strategy on.

Results showed that people expected feminine and neutral agents to cooperate, which encouraged players to take advantage of them. Male participants were more likely than female participants to exploit their partners. The authors consider this a form of bias rooted in familiar gender stereotypes.
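
Why expecting cooperation invites exploitation can be seen in a small sketch of the game. The payoff numbers below are the common textbook values (5, 3, 1, 0), assumed here for illustration only and not the amounts used in the study; the names `PAYOFFS`, `my_payoff`, and `best_response` are likewise hypothetical.

```python
# Illustrative Prisoner's Dilemma payoffs (textbook values, NOT the study's).
# Keys are (my_move, partner_move); values are (my_points, partner_points).
PAYOFFS = {
    ("cooperate", "cooperate"): (3, 3),  # mutual cooperation
    ("cooperate", "defect"): (0, 5),     # I am exploited
    ("defect", "cooperate"): (5, 0),     # I exploit my partner
    ("defect", "defect"): (1, 1),        # mutual defection
}


def my_payoff(my_move: str, partner_move: str) -> int:
    """Points I receive for a given pair of moves."""
    return PAYOFFS[(my_move, partner_move)][0]


def best_response(expected_partner_move: str) -> str:
    """Move that maximizes my own points, given what I expect the partner to do."""
    return max(
        ("cooperate", "defect"),
        key=lambda move: my_payoff(move, expected_partner_move),
    )


if __name__ == "__main__":
    # A player who expects cooperation earns more by defecting (5 vs. 3).
    print(best_response("cooperate"))
    print(my_payoff("defect", "cooperate") - my_payoff("cooperate", "cooperate"))
```

Under these assumed payoffs, a purely self-interested player who believes the partner will cooperate gains two extra points by defecting, which is the kind of exploitation the study describes.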

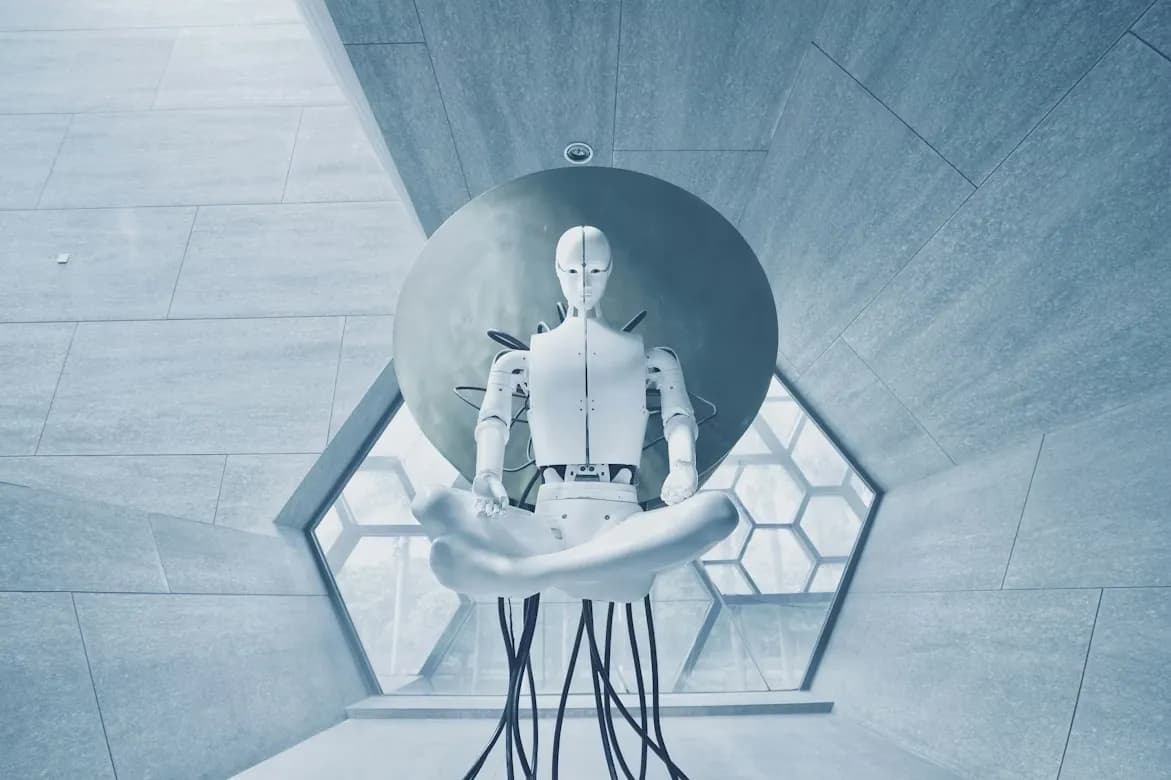

The study warns that such behavior may extend beyond digital interactions. As AI systems become more human-like, with voices, names, and gender labels, existing prejudices may be reinforced, even if unintentionally.

To prevent this outcome, designers must consider how identity cues influence behavior. Creating friendly and human-like interfaces is not enough: systems should avoid inadvertently promoting discrimination or inequality.